- Posts: 28

Latency Test for deviation vs ersky vs opentx

- brycej

-

Topic Author

- Offline

I recently got a TS2G and added deviation (from the Aug 1, 2018 build) to my frsky latency test.

Test setup:

Using Frsky_X with 8 channels.

Used an ARB (arbitrary waveform generator) to flip a switch on the Taranis every 1 s; the switch commands 100% throttle.

The RX gets the signal and passes it over SPI/SBUS/FPort to the FC running Betaflight.

Betaflight increases the DShot throttle values.

Capture the time at which the DShot value goes over 200 counts (~140 was idle and 1000 was 50%).
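The capture step above can be sketched as a small helper that scans decoded DShot frames for the first one above the threshold after the trigger edge. This is only an illustration of the measurement, not the actual analysis used for the spreadsheet; the `dshot_sample_t` layout and the `latency_us` name are assumptions.

```c
#include <stddef.h>
#include <stdint.h>

/* One decoded DShot frame from a logic-analyzer capture (hypothetical layout). */
typedef struct {
    uint32_t t_us;     /* capture timestamp in microseconds */
    uint16_t throttle; /* decoded DShot throttle value */
} dshot_sample_t;

/* Latency in microseconds from the trigger edge to the first DShot frame
 * above `threshold`, or -1 if no such frame follows the edge. */
int32_t latency_us(uint32_t trigger_t_us, const dshot_sample_t *s, size_t n,
                   uint16_t threshold)
{
    for (size_t i = 0; i < n; i++) {
        if (s[i].t_us >= trigger_t_us && s[i].throttle > threshold)
            return (int32_t)(s[i].t_us - trigger_t_us);
    }
    return -1;
}
```

With a threshold of 200, idle frames around 140 are ignored and the first frame at the commanded throttle stops the clock.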

I have only run one test so far, against a matek411RX, which has an SPI receiver chip on board.

I was hoping that deviation would be the fastest since you have direct access to the TX chips via SPI, but it appears to be slightly slower on average than ersky with the same setup (although its fastest samples were quicker: 10.94ms vs 11.37ms). It is still much faster than opentx. It seems deviation phases in and out of the lowest latency over about 30s - maybe a timer phasing in and out? Opentx has a similar issue, but that comes from not syncing to the heartbeat on the internal XJT module. Compare a couple of the tabs in the data below if you're interested!

Link to the data: docs.google.com/spreadsheets/d/1aTRuUzEI...hHw/edit?usp=sharing

So for a quick comparison of the different TXs talking to an SPI RX in Betaflight (truncating all the numbers):

Deviation 10-26ms

Ersky 11-23ms

Opentx 16-35ms

Let me know if you have any questions!

Please Log in or Create an account to join the conversation.

- Fernandez

-

- Offline

- Posts: 983

The 10ms is probably just as low as it gets, due to the Frsky update rate in 8 channel mode? (Then, depending on timing, you would theoretically expect 10-20ms.)

How are these switches sampled? There may be some capacitance or RC filtering on the switches?

- brycej

-

Topic Author

- Offline

- Posts: 28

I did a video on my test setup when I was testing opentx/ersky9x on the Taranis, if you want to see it.

Quick summary:

I used a square wave from an ARB generator at 1Hz, 0 to 3.3V, to trigger one of the switches. In deviation I set the switch to be the throttle output. I used a Saleae logic analyzer to capture when the ARB "switched" the throttle on, and then waited to see when the DShot throttle value (Betaflight motor output) went up, also decoded with the Saleae.

Photo of my mixer setup: photos.app.goo.gl/8pRvEV4MjAaQW4UE9

It might be better to feed the pulse directly into the ADC for the throttle gimbal, but I didn't do that because I thought other transmitters use PWM gimbals and I wanted a general test I could use on any transmitter. Also, it was much easier to use one of the aux switches. The way I have it set up, the aux switch drives one of the 4 control channels. I know some protocols prioritize channels 1-4 in the over-the-air data, which is why I was sending the data over the throttle channel.

A couple of questions about things that could affect that: Are the switches sampled less frequently than the ADC on the gimbals, or are they all sampled at the same rate? Or is there some additional processing because a switch gets translated into throttle values, versus using the throttle gimbal directly? My assumption is that the ADCs are all sampled at the same rate and that the same processing happens to all the channels in the mixer.

- brycej

-

Topic Author

- Offline

- Posts: 28

github.com/DeviationTX/deviation/blob/9b...ca/src/target.h#L121

github.com/DeviationTX/deviation/blob/2c...n/stm32/clock.c#L223

So that would be adding 0-5ms of latency to the signal. It seems like the interval could either be reduced, or the update could be called right before the packet is sent out, around here, to eliminate the delay completely: github.com/DeviationTX/deviation/blob/3c...frskyx_cc2500.c#L249

- brycej

-

Topic Author

- Offline

- Posts: 28

Is it sending out 16 channels all the time? Maybe I'm missing something on how `chan_offset` works?

github.com/DeviationTX/deviation/blob/3c...frskyx_cc2500.c#L288

- hexfet

-

- Away

- Posts: 1957

The ADC is read continuously via DMA. The averaging filter and mixers are run every 5ms. There is some commented-out code that ran them after every DMA update. Not sure why it was changed - maybe cpu load. Switches do not go through the ADC but do go through the mixers. The mixer output is what is transmitted by the protocols.
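To make the 0 to 5 ms spread concrete, here is a minimal sketch of how old the mixer output is when a packet gets built, assuming ideal timers with the mixer running every 5 ms and FrskyX packets every 9 ms. The function name and the `offset_us` parameter are illustrative and not taken from the deviation source.

```c
#include <stdint.h>

#define MIXER_PERIOD_US  5000u /* mixers run every 5 ms */
#define PACKET_PERIOD_US 9000u /* FrskyX packet period */

/* Age of the newest mixer output when packet number `k` is built, assuming
 * the mixer runs at t = 0, 5000, 10000, ... and packets are built at
 * t = offset_us + k * 9000 (both in microseconds). */
uint32_t channel_age_us(uint32_t k, uint32_t offset_us)
{
    uint32_t t_packet = offset_us + k * PACKET_PERIOD_US;
    return t_packet % MIXER_PERIOD_US;
}
```

With `offset_us = 0` the age steps through 0, 4000, 3000, 2000, 1000 µs and then repeats, so under these assumptions successive packets carry data of different ages across the whole 0 to 5 ms range.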

Makes me curious about the latency just from switch input to devo radio output. Will add it to the list...

- brycej

-

Topic Author

- Offline

- Posts: 28

I did some additional testing with a hack that runs the mixer calc and ADC filter right at the top of this function:

github.com/DeviationTX/deviation/blob/3c...frskyx_cc2500.c#L249

That definitely helped to reduce the latency, data here:

docs.google.com/spreadsheets/d/1MlGdLWIP...ypg/edit?usp=sharing

I also did a test with the ARB on the ADC of the throttle. That showed it going faster and with less jitter, so my switch method was adding in some additional lag.

Modded code, ARB attached to a switch that triggers throttle: 6 - 21ms

Modded code, ARB attached directly to throttle: 6 - 16ms

I think I remember the actual over-the-air time is ~4.5ms (the protocol sends every 9ms), so a 6ms minimum is pretty darn good.

All of these are much, much better than the opentx 16-35ms!

- Fernandez

-

- Offline

- Posts: 983

Quote hexfet

"For example if the number of channels is set to 12 then channels 1-4 and 8-12 update every 18ms, while 5-7 will update every 9ms"

I do not understand how it works.

By the way, I have never understood why we are still sending switches over full channels, using the full channel bandwidth, rather than just some binary values...

But with TX and RX modules over SPI, fully firmware controlled, it should be possible to make 4 channels of high speed data and add some binary encoded/decoded switches?

All very interesting, especially with Betaflight squeezing the best out of the drones...
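As a rough illustration of the binary-switch idea, two-position switches could be packed one bit each instead of taking a full channel. This is a hypothetical encoding, not part of FrskyX or any existing deviation protocol, and the function names are made up for the example.

```c
#include <stdint.h>

/* Pack up to 8 two-position switches into one byte, bit i = switch i. */
uint8_t pack_switches(const uint8_t *sw_on, unsigned count)
{
    uint8_t bits = 0;
    for (unsigned i = 0; i < count && i < 8; i++) {
        if (sw_on[i])
            bits |= (uint8_t)(1u << i);
    }
    return bits;
}

/* Return 1 if switch `index` is on in a packed byte, else 0. */
int unpack_switch(uint8_t bits, unsigned index)
{
    return index < 8 ? (int)((bits >> index) & 1u) : 0;
}
```

Eight switches then cost one byte per packet; three-position switches would need two bits each.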

- brycej

-

Topic Author

- Offline

- Posts: 28

For the updating of more than 8 channels, it looks like it happens here: github.com/DeviationTX/deviation/blob/ma...frskyx_cc2500.c#L209

If you have 12 channels the 8-12 then will go through that function normally, 13-16 will hit that function.

I think the reason they are sending full channels is probably planes that don't use flight controllers. I actually have a dual prop plane with 2 motors and 3 servos, so that needed 5 full channels. I could imagine an even more complex plane with more servos. For quads and planes with FCs it probably isn't ever really needed.

It would be nice to bump the over-the-air update rate up to one packet every 2 or 4ms and send much simpler aux channel data. But I only have 2 quads with SPI receivers; the rest are using XSR or X4R, and I want to keep using those as well. Might be able to update opensky to support a new protocol: github.com/fishpepper/OpenSky

- Fernandez

-

- Offline

- Posts: 983

But the benefit of SPI on both sides is that you can easily change the protocol (no need to flash the RX). With a CC2500 present on both sides, it is no longer necessary to use the Frsky scheme, so a 4 channel "Fast RaceKraft protocol" plus binary switches could be a possibility for Betaflight and Deviation?

The same goes for a longer range protocol using slow updates or, thinking outside the box, maybe even a variable rate, as is done in Crossfire?

Both are open source, so who knows, someone may pick up on this.

The trend is that more and more small details are being tuned and optimized to get quads to fly as well as possible.

Lowest latency is certainly one of them.

- hexfet

-

- Away

- Posts: 1957

Changing the code to run the mixer calculations right before the protocol code is too risky without understanding the time required to calculate the mixers. The time required (and variability of that time) would affect the protocol timing. The max number of mixers is four times the total number of channels (real + virtual). Need testing to determine how much time is used for mixing. Maybe the difference between min and max will be small enough to be ignored.

Within deviation I think "minimizing latency" means minimizing the time between an input change and the change being sent to a radio for transmission. Not sure how best to achieve that, but reviewing the current implementation seems a good place to start.

Beginning at the end, channel data is sent to the radio chip by the protocol code. Each protocol has a (usually fixed) packet period which is the interval between sending new data packets to the radio. The period varies by protocol. It seems obvious that minimum latency would include completing an update of the mixers just before the protocol builds the packet to send. That implies tying the mixer calculation code to the protocol code to match the update timings. Currently the timing of reading inputs and the use of that data by protocol code are independent.

If the mixers require a roughly constant amount of time then moving that calculation to just before the protocol won't affect the protocol timing much. In fact most protocols seem to have a fairly good tolerance to variations in packet period, so some variation in mixer timing is probably tolerable. Just need to be sure the variation is a small percentage of the packet period.

The channel data used by the protocol code is currently updated every 5ms by medium_priority_cb being called by timer interrupt. The timer ISR first calls ADC_Filter to apply a moving average calculation to the raw ADC readings. The average is over 10 samples for every tx except the devo12, which averages 100 samples. Then MIXER_CalcChannels is run, which uses the ADC averaged readings as inputs to the mixers. The mixers update the Channels array. Whenever the current protocol interrupt fires the protocol uses the current Channels array to calculate output values. So there will be some variation in raw ADC update rate between transmitters due to the different number of analog inputs being handled. The averaging filter adds some fixed delay, which is longer on the devo12.
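For readers unfamiliar with the filtering step, a moving average over a fixed window can be sketched as below. This is not the actual ADC_Filter code from deviation, only a minimal illustration of the technique, with the window of 10 taken from the description above.

```c
#include <stdint.h>

#define WINDOW 10 /* 10 samples per input; the devo12 uses 100 */

/* Average the last WINDOW raw readings of one analog input. */
uint16_t moving_average(const uint16_t raw[WINDOW])
{
    uint32_t sum = 0;
    for (int i = 0; i < WINDOW; i++)
        sum += raw[i];
    return (uint16_t)(sum / WINDOW);
}
```

After a step on the input, the output only reaches the halfway point once half the window holds new samples, which is where the fixed filter delay comes from.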

The ADC is run in continuous mode, with DMA updating a memory array of 10 (100 for devo12) values for each analog input. The number of analog inputs varies by transmitter from 4 to 10, plus 2 for temp and voltage. From the F103 datasheet it's about 1us per ADC conversion, so a full update of all raw values takes from 6 to 12 microseconds. Filtering the raw ADC values is not synchronized with DMA, so when calculating the moving average it may have mixed samples of some ADC inputs (i.e. some inputs have the latest update, while others still have 10us old data). Since most protocols have packet periods of thousands of microseconds these timing variations may be insignificant.

There is commented-out code that ran the mixers at the end of every ADC conversion cycle. Anyone know why that approach was abandoned? Maybe because the ADC update rate is much faster than protocol updates, or maybe because mixer calculation takes significant time. And why is the devo12 moving average window 10 times larger than the other transmitters?

tl;dr Seems like there's an opportunity to reduce stick-to-protocol latency within deviation, but needs investigation.

- vlad_vy

-

- Offline

- Posts: 3333

hexfet wrote: And why is the devo12 moving average window 10 times larger than the other transmitters?

It's for filtering digital noise from switching DC-DC voltage regulator. It's specific for Devo 12s with 1S LiPo battery.

- Fernandez

-

- Offline

- Posts: 983

To me the easiest would be a kind of oversampling. As both loops are unsynchronised, the "age" of the freshly calculated data when it reaches the protocol loop varies more. The protocol just reads the latest sample, and many samples in between are basically never transmitted.

The question is how fast we can run the loop, for instance at 1kHz, without overloading the CPU?

The nicest way would be a kind of synchronous mode: just before the "protocol stream encoder" needs the data, it triggers the loop to take a sample from the ADC and run the mixers?

Basically the protocol speed then defines the sample speed of the radio.

Interesting that we are looking into this, as performance optimisations are a very hot topic...

- brycej

-

Topic Author

- Offline

- Posts: 28

Yeah, moving the ADC and mixer calc to right before the packet gets sent out was just a hack to test how low the latency can go. For frsky_x it appears to be OK, but I wasn't recording the timing on the RX side and I wouldn't notice the occasional dropped packet.

I'm pretty new to the deviation code so let me know if I am reading the code wrong.

The protocol timing is driven off a high priority timer.

The mixer and ADC happen on a medium priority interrupt.

DMA is a slightly lower medium priority interrupt.

All the button stuff happens in the main while loop.

When I see the `return 9000` in, for example, the frsky_x protocol functions, it is setting up the next interrupt, which will happen 9 ms later. Does this not take into account how long the function actually takes to run before each return? In other words, the 4 main data states on frsky_x take 9000 (adding up all the returns). The functions called in each of those states (such as frskyX_dataframe()) are not counted toward the timing, so you actually end up a little longer than 9000. It should be pretty close because there aren't a lot of cycles being spent.

But adding the mixer and ADC calc adds a bunch more cycles, so you'll end up off by more than that? And it is worse if the mixer/ADC time is variable, because it will cause the total protocol timing to vary randomly. Is that the problem? Or maybe I'm misreading something.

- hexfet

-

- Away

- Posts: 1957

The function TIMx_ISR() does account for the time taken to run the protocol callback function. The requested delay value is added to the value of the CCR register at the time of the interrupt. So for example with frskyx each callback interrupt occurs 9ms after the previous one regardless of how much time the protocol code takes to run.
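The difference between the two scheduling schemes can be simulated with a short sketch. It is not the TIMx_ISR() code; it assumes a 16-bit compare register counting in microseconds, and `elapsed_after` is a hypothetical helper written only for this comparison.

```c
#include <stdint.h>

/* Total time covered by `n` scheduled interrupts.
 * anchored != 0: each delay is added to the compare value that fired the
 *                interrupt, so the callback run time does not accumulate.
 * anchored == 0: each delay is added after the callback has finished,
 *                so every period grows by the callback run time. */
uint32_t elapsed_after(unsigned n, uint16_t delay_us, uint16_t callback_run_us,
                       int anchored)
{
    uint16_t ccr = 0; /* compare value that fired the current interrupt */
    uint32_t elapsed = 0;
    for (unsigned i = 0; i < n; i++) {
        uint16_t base = anchored ? ccr : (uint16_t)(ccr + callback_run_us);
        uint16_t next = (uint16_t)(base + delay_us); /* wraps at 65536 */
        elapsed += (uint16_t)(next - ccr);           /* wrap-safe distance */
        ccr = next;
    }
    return elapsed;
}
```

With a 9000 µs delay and a 265 µs callback, ten anchored periods cover exactly 90000 µs, while the unanchored variant covers 92650 µs.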

Of course this doesn't work if the ISR takes more than 9ms to run. So the concern is if someone is running a model file with the maximum number of most complicated mixers, will running the mixer/adcfilter and protocol code take more time than the packet interval? If running the mixer/adcfilter code plus the protocol takes much less than the packet interval it should be okay to run in the callback interrupt.

There's some debug timing output under the TIMING_DEBUG define but haven't tried it yet. Build with TYPE=dev to enable printf serial port output on the trainer port (115k 8N1).

- hexfet

-

- Away

- Posts: 1957

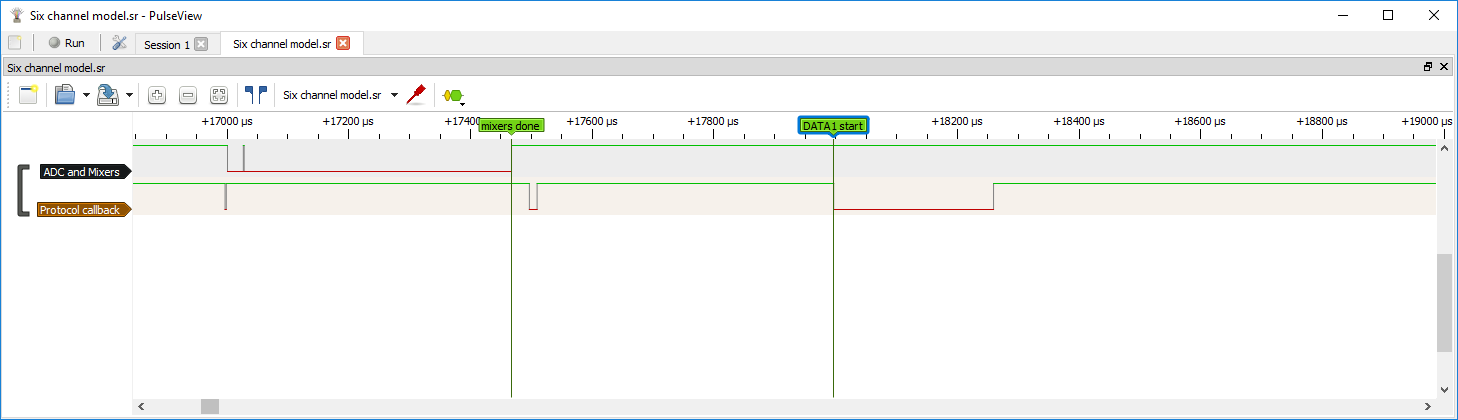

Measured timings on a Devo10 with 4n1 module using FrskyX linked to XSR receiver. Modified the existing debug_timing code because it only measured millisecond resolution and most times of interest are less than that. I made a build that uses the trainer tx and rx pins as gpio, and measured times with a logic analyzer.

The ADC_Filter run time is about 26 microseconds for the six analog inputs on the Devo10. For 10 analog inputs it's probably about 44 µs. Short enough to be ignored till maybe final timings. It's consistently the same value.

Run time for MIXER_CalcChannels varied from about 280 µs for a simple 4channel model to about 1200 µs for a "max mixers" model. The max model has 103 mixers over 16 channels, all set for 13-point smoothed curves with muxtype multiply. This should be close to maximum runtime, though there might be some longer execution paths - difficult to tell which is most resource intensive. The total run time is mostly proportional to the number of mixers regardless of their type.

The FrskyX protocol callback run times for each state while connected were:

DATA1 (send packet): 265 µs

DATA2 (turn amp) : 28 µs

DATA3 (start rcv) : 8 µs

DATA4 (process rcv): 100 µs

These timings are pretty consistent regardless of number of channels or number of telemetry sensors.

The protocol delay between DATA1 and DATA2 is 5200 µs so it seems there's plenty of time to run the adc filter and mixers during the protocol callback. However when I tried this problems cropped up. With a 4 channel model the telemetry stopped working, and with the "Max mixers" model the receiver lost connection. The protocol packet timing is changed too much by the delay of running the adc/mixers as part of building the transmit packet. To avoid changing the protocol timing a better approach is to have the adc/mixer calculations finish just before they're needed by the protocol.

One way to do this is trigger the interrupt that runs the adc/mixer code from within the protocol callback. For proof of concept I modified FrskyX to run the adc/mixers 1 ms before the DATA1 phase. For a typical model this should result in the mixers being completely updated just before the DATA1 callback needs the fresh data. For release the timing could be modified dynamically so the channels are updated just before the data is needed regardless of the number of mixers.
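One way to picture the dynamic version is to split the existing wait before DATA1 into two timer delays whose sum is unchanged, so the packet period stays the same. The sketch below is not the test_latency build's code; the struct, the function name and the margin parameter are assumptions for illustration.

```c
#include <stdint.h>

/* The wait before DATA1, split so that a mixer update can be requested
 * shortly before the packet is built. */
typedef struct {
    uint16_t before_update_us; /* delay until the mixer update is requested */
    uint16_t before_data1_us;  /* delay from the request until DATA1 */
} split_delay_t;

split_delay_t split_delay(uint16_t wait_us, uint16_t mixer_run_us,
                          uint16_t margin_us)
{
    uint32_t lead = (uint32_t)mixer_run_us + margin_us;
    split_delay_t d;
    if (lead >= wait_us) {
        /* Not enough room: request the update immediately. */
        d.before_update_us = 0;
        d.before_data1_us = wait_us;
    } else {
        d.before_data1_us = (uint16_t)lead;
        d.before_update_us = (uint16_t)(wait_us - lead);
    }
    return d;
}
```

For example, with the measured 26 µs filter plus 280 µs mixer time (306 µs), a 100 µs margin and an illustrative 3000 µs wait, the update would be requested 406 µs before DATA1.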

Test build test_latency (e1359b5) has the modification. brycej, not sure if the issues I saw would have affected your measurements. If possible please measure the FrskyX latency in this test build. My guess is latency will be a little more than your previous test, but it will work even with models with many mixers.

- Fernandez

-

- Offline

- Posts: 983

What would the Devo 10 latency be in that case (considering we do not count the "9ms protocol deadtime")?

Does this changed "fresh data" method affect all protocols, or is it only implemented for Frsky?

- hexfet

-

- Away

- Posts: 1957

In the test build FrskyX is the only protocol that works at all because the 5ms mixer updates are disabled. A real solution will keep the current behavior but add the ability for protocols to schedule the mixer updates. I'd update the "hobby" protocols to reduce latency but likely not the "toy" protocols - too many of them. And personally I don't think a few ms matters to flying feel.

For FrskyX the "channel value update" to packet transmit delay would be reduced to under one millisecond. Currently this delay varies from 0 to 5 ms. For analog inputs there's some additional delay due to filtering.

This pic shows the test build timing with a model that has a half-dozen mixers. The first pulse on the top trace is adc filtering, followed by the mixer calculations. The first pulse on the second trace is a new protocol state that requests a mixer update, followed by pulses representing DATA4 and DATA1 run times.
